(Operadically, the point is that both FinStat and [0,∞] are algebras of an operad P whose operations are convex linear combinations. The n-ary operations in P are just probability distributions on an n-element set.)

Relative entropy

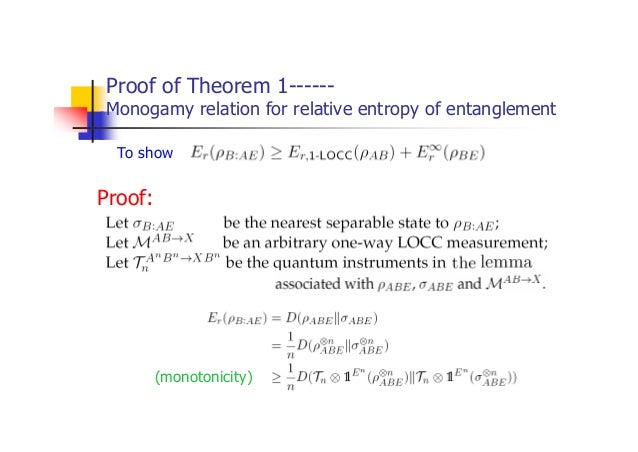

You may recall how Tom Leinster, Tobias Fritz and I cooked up a neat category-theoretic characterization of entropy in a long conversation here on this blog. Now Tobias and I have a sequel giving a category-theoretic characterization of relative entropy. But since some people might be put off by the phrase ‘category-theoretic characterization’, it’s called:

A Bayesian characterization of relative entropy.

I’ve written about this paper before, on my other blog:

Relative Entropy (Part 1): how various structures important in probability theory arise naturally when you do linear algebra using only the nonnegative real numbers.

Relative Entropy (Part 2): a category related to statistical inference, FinStat.

A note on terminology: information theory provides us with a basic functional, the relative entropy (or Kullback-Leibler divergence), an asymmetrical measure of dissimilarity. The term ‘relative entropy’ is also sometimes used for what is more commonly called normalised entropy, which is the ratio between the observed entropy and the theoretical maximum entropy for a given system. Relative entropy can be interpreted in terms of both coding and diversity, and it has connections with other subjects, including Riemannian geometry.

Relative entropy sends any convex linear combination of morphisms in FinStat to the corresponding convex linear combination of numbers in [0,∞]. Intuitively, this means that if we take a coin with probability P of landing heads up, and flip it to decide whether to perform one measurement process or another, the expected information gained is P times the expected information gain of the first process plus 1 − P times the expected information gain of the second process.
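To make the coin-flip statement concrete, here is a minimal Python sketch. It is not code from the paper: the function names `kl_divergence`, `normalised_entropy` and `mix` are hypothetical, natural logarithms are an arbitrary choice, and the sketch works with plain probability distributions on a disjoint union of outcome sets rather than with morphisms in FinStat. Under those assumptions it checks numerically that the relative entropy of the coin-flip mixture equals the corresponding convex combination of the two relative entropies, and it also shows how normalised entropy differs from Kullback-Leibler divergence.

```python
import math


def kl_divergence(p, q):
    """Relative entropy (Kullback-Leibler divergence) of p with respect to q, in nats.

    Returns math.inf when p puts mass on an outcome that q rules out,
    so the value lives in [0, inf].
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # convention: 0 * log(0 / q) = 0
        if qi == 0:
            return math.inf
        total += pi * math.log(pi / qi)
    return total


def normalised_entropy(p):
    """Shannon entropy of p divided by its maximum value log(n)."""
    n = len(p)
    if n < 2:
        return 0.0  # assumption: a one-point distribution has normalised entropy 0
    h = -sum(pi * math.log(pi) for pi in p if pi > 0)
    return h / math.log(n)


def mix(lam, a, b):
    """Flip a coin with heads probability lam, then sample from a (heads) or b (tails).

    The result is a distribution on the disjoint union of the two outcome
    sets, so the outcome also records which process was performed.
    """
    return [lam * x for x in a] + [(1 - lam) * x for x in b]


# Illustrative numbers only: two "measurement processes", each with a
# true distribution p and a prior guess q.
lam = 0.25
p1, q1 = [0.5, 0.5], [0.9, 0.1]
p2, q2 = [0.2, 0.3, 0.5], [1 / 3, 1 / 3, 1 / 3]

lhs = kl_divergence(mix(lam, p1, p2), mix(lam, q1, q2))
rhs = lam * kl_divergence(p1, q1) + (1 - lam) * kl_divergence(p2, q2)
print(math.isclose(lhs, rhs))  # information gain is convex linear in this sense
```

The identity holds because the two processes are kept on disjoint outcome sets: the factor of the coin probability cancels inside each logarithm. For ordinary mixtures of distributions on the same set, Kullback-Leibler divergence is only jointly convex, so equality would generally fail.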
